Chalearn LAP IsoGD Database

News: the test labels and related scripts have been released: [download]

Introduction

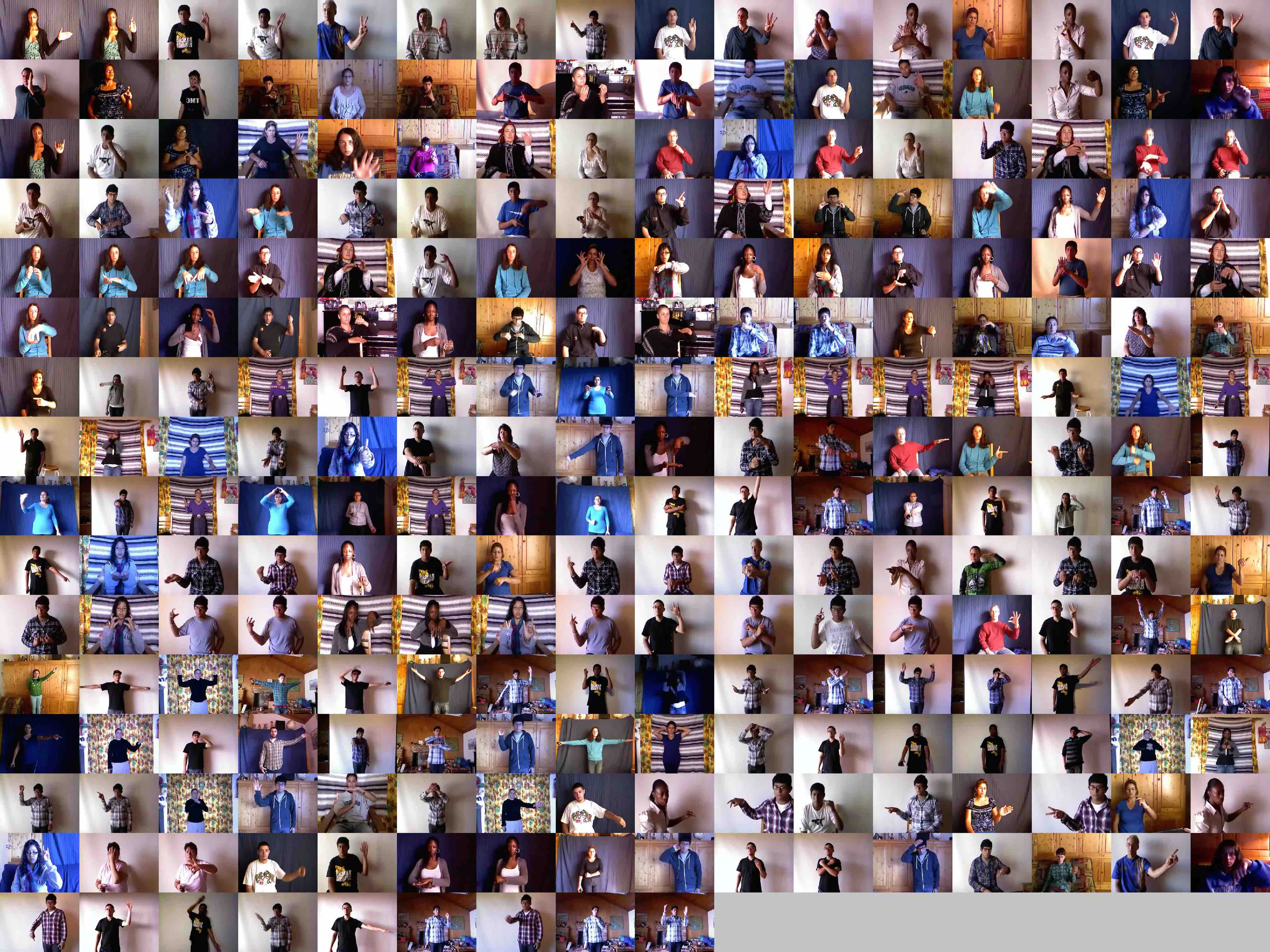

Because the previously released gesture datasets contain only a limited number of training samples, it is hard to apply them in real applications. Therefore, we have built a large-scale gesture dataset: the Chalearn LAP RGB-D Isolated Gesture Dataset (IsoGD). The focus of the challenge is "large-scale" and "user-independent" learning: each gesture class has more than 200 RGB and depth videos, and performers who appear in the training set do not appear in the validation and testing sets. The Chalearn LAP IsoGD dataset is derived from the Chalearn Gesture Dataset (CGD) [1], which was designed for "one-shot learning". The CGD dataset contains more than 54,000 gestures in total, split into subtasks. To reuse the CGD dataset, we obtained 249 gesture labels and manually annotated the temporal segmentation, that is, the start and end frames of each gesture in the continuous videos of the CGD dataset.

Database Information and Format

This database includes 47933 RGB-D gesture videos (about 9 GB). Each RGB-D video represents one gesture only, and there are 249 gesture labels performed by 21 different individuals.

For convenience, the database is divided into three mutually exclusive subsets.

| Sets | # of Labels | # of Gestures | # of RGB Videos | # of Depth Videos | # of Performers | Label Provided |
|---|---|---|---|---|---|---|
| Training | 249 | 35878 | 35878 | 35878 | 17 | Yes |
| Validation | 249 | 5784 | 5784 | 5784 | 2 | No |
| Testing | 249 | 6271 | 6271 | 6271 | 2 | No |
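
As a quick sanity check, the per-split counts in the table can be summed and compared with the totals stated earlier (47933 videos, 21 performers). The short Python sketch below does this; the dictionary layout and variable names are illustrative only and are not part of the released scripts.

```python
# Per-split counts copied from the table: (number of gestures, number of performers).
splits = {
    "Training": (35878, 17),
    "Validation": (5784, 2),
    "Testing": (6271, 2),
}

total_gestures = sum(gestures for gestures, _ in splits.values())
# Adding performer counts is only meaningful because the subsets are
# user independent, so no performer is counted in two subsets.
total_performers = sum(performers for _, performers in splits.values())

# Share of all gestures that falls in each subset, as a percentage.
shares = {
    name: 100.0 * gestures / total_gestures
    for name, (gestures, _) in splits.items()
}

print(total_gestures, total_performers)
for name, share in shares.items():
    print(f"{name}: {share:.1f}%")
```

Both sums agree with the totals given in the text, which is consistent with the three subsets being mutually exclusive.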

The test protocol is shown in the table above. It uses three list files: train.txt for the training set, valid.txt for the validation set, and test.txt for the testing set.

train.txt ==> Training Set. Each row format: RGB_video_name depth_video_name GestureLabel

valid.txt ==> Validation Set. Each row format: RGB_video_name depth_video_name

test.txt ==> Testing Set. Each row format: RGB_video_name depth_video_name

For the validation and testing sets, only the samples are provided, without labels. Where labels are provided for a subset, gesture labels range from 1 to 249. Please note that label indices start from 1.
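
The row formats above can be read with a few lines of Python. The following is a minimal sketch, assuming the fields in each row are separated by whitespace and that file names contain no spaces; the `Sample` type, the function names, and the example file names are hypothetical and do not come from the released scripts.

```python
from typing import List, NamedTuple, Optional

NUM_CLASSES = 249  # labels range from 1 to 249 according to the text above


class Sample(NamedTuple):
    rgb_video: str
    depth_video: str
    label: Optional[int]  # None for rows without a label (valid.txt, test.txt)


def parse_list_line(line: str) -> Sample:
    """Parse one row of train.txt, valid.txt, or test.txt."""
    fields = line.split()
    if len(fields) == 3:
        label = int(fields[2])
        if not 1 <= label <= NUM_CLASSES:
            raise ValueError(f"label out of range: {label}")
        return Sample(fields[0], fields[1], label)
    if len(fields) == 2:
        return Sample(fields[0], fields[1], None)
    raise ValueError(f"unexpected row format: {line!r}")


def parse_list_text(text: str) -> List[Sample]:
    """Parse the full contents of a list file, skipping blank lines."""
    return [parse_list_line(row) for row in text.splitlines() if row.strip()]


# Hypothetical rows, for illustration only.
example = "rgb_0001.avi depth_0001.avi 26\nrgb_0002.avi depth_0002.avi 249\n"
samples = parse_list_text(example)

# Many classifiers expect zero-based class indices, so shift the 1-based labels.
zero_based = [s.label - 1 for s in samples if s.label is not None]
print(samples[0], zero_based)
```

Because the labels start from 1, subtracting 1 before training and adding 1 back before writing predictions avoids an off-by-one error.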

Main Tasks:

1) Isolated gesture recognition using RGB and depth videos

2) Large-scale learning

3) User independence: the users in the training set do not appear in the testing and validation sets.

Publication and Result

To use both datasets please cite:

Jun Wan, Yibing Zhao, Shuai Zhou, Isabelle Guyon, Sergio Escalera and Stan Z. Li, "ChaLearn Looking at People RGB-D Isolated and Continuous Datasets for Gesture Recognition", CVPR workshop, 2016. [PDF]

The above reference should be cited in all documents and papers that report experimental results based on the Chalearn LAP IsoGD Database.

ISOGD 2017:

| Rank | Team | r (valid set) | r (test set) |
|---|---|---|---|
| 1 | ASU [7] | 64.40 | 67.71 |
| 2 | SYSU_ISEE | 59.70 | 67.02 |
| 3 | Lostoy | 62.02 | 65.97 |
| 4 | AMRL [6] | 60.81 | 65.59 |
| 5 | XDETVP-TRIMPS [5] | 58.00 | 60.47 |
| - | baseline | 49.17 | 67.26 |

ISOGD 2016:

| Rank | Team | Recognition rate r |
|---|---|---|
| 1 | FLiXT [4] | 56.90 |
| 2 | AMRL [2] | 55.57 |
| 3 | XDETVP-TRIMPS [3] | 50.93 |
| 4 | ICT_NHCI | 46.80 |
| 5 | XJTUfx | 43.92 |
| 6 | TARDIS | 40.15 |
| - | baseline [1] | 24.19 |
| 7 | NTUST | 20.33 |
| 8 | Bczhangchen | 0.45 |

Ref:

[1] Jun Wan, Yibing Zhao, Shuai Zhou, Isabelle Guyon, Sergio Escalera and Stan Z. Li, "ChaLearn Looking at People RGB-D Isolated and Continuous Datasets for Gesture Recognition", CVPR workshop, 2016.

[2] Wang, Pichao, et al. "Large-scale isolated gesture recognition using convolutional neural networks." Pattern Recognition (ICPR), 2016 23rd International Conference on. IEEE, 2016.

[3] Zhu, Guangming, et al. "Large-scale isolated gesture recognition using pyramidal 3d convolutional networks." Pattern Recognition (ICPR), 2016 23rd International Conference on. IEEE, 2016.

[4] Li, Yunan, et al. "Large-scale gesture recognition with a fusion of RGB-D data based on the C3D model." Pattern Recognition (ICPR), 2016 23rd International Conference on. IEEE, 2016.

[5] Zhang, Liang, et al. "Learning Spatiotemporal Features Using 3DCNN and Convolutional LSTM for Gesture Recognition." Proceedings of the IEEE International Conference on Computer Vision Workshops. 2017.

[6] Wang, Huogen, et al. "Large-scale Multimodal Gesture Recognition Using Heterogeneous Networks." Proceedings of the IEEE International Conference on Computer Vision Workshops. 2017.

[7] Miao, Qiguang, et al. "Multimodal Gesture Recognition Based on the ResC3D Network." Proceedings of the IEEE International Conference on Computer Vision Workshops. 2017.

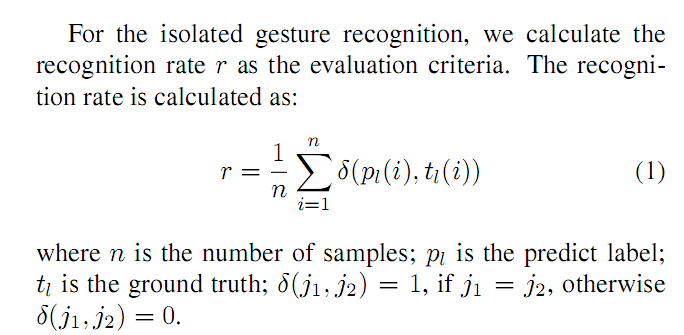

Evaluation Metric
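
The result tables above report a recognition rate r. The sketch below assumes that r is the percentage of videos whose predicted label equals the ground-truth label; this reading is an assumption based on the table values, and the cited paper should be consulted for the authoritative definition. The function name is hypothetical.

```python
from typing import Sequence


def recognition_rate(predicted: Sequence[int], truth: Sequence[int]) -> float:
    """Return the percentage of samples whose predicted label matches the truth.

    Assumed definition: r = 100 * (number of correct predictions) / (number of samples).
    """
    if len(predicted) != len(truth):
        raise ValueError("predicted and truth must have the same length")
    if len(truth) == 0:
        raise ValueError("at least one sample is required")
    correct = sum(1 for p, t in zip(predicted, truth) if p == t)
    return 100.0 * correct / len(truth)


# Toy example with four videos and 1-based gesture labels.
rate = recognition_rate([26, 3, 249, 7], [26, 3, 249, 8])
print(f"r = {rate:.2f}")
```

Under this assumption, each video contributes equally to r regardless of its gesture class, so classes with more videos carry more weight.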

Download Instructions

To obtain the database, please follow the steps below:

- Download and print the document Agreement for using Chalearn LAP IsoGD

- Sign the agreement

- Send the agreement to jun.wan@ia.ac.cn

- If your application has been approved, check your email after one day for a login account and password for our website.

- Download the Chalearn LAP IsoGD database from our website with the authorized account within 48 hours.

Copyright Note and Contacts

The database is released for research and educational purposes. We hold no liability for any undesirable consequences of using the database. All rights of the Chalearn LAP IsoGD are reserved.

References

[1] Guyon, I., Athitsos, V., Jangyodsuk, P., Escalante, H. & Hamner, B. (2013). Results and analysis of the chalearn gesture challenge 2012.

Contact

Jun Wan, Assistant Professor

Room 1411, Intelligent Building

95 Zhongguancun Donglu,

Haidian District,

Beijing 100190, China.

Email: jun.wan at ia.ac.cn

joewan10 at gmail.com